A "Hello, World!" program is generally a simple computer program that displays a message similar to "Hello, World!" on the screen while ignoring any user input. Only a small piece of code in most general-purpose programming languages, it is used to illustrate a language's basic syntax. It is often the first program written by a student of a new programming language, but it can also serve as a sanity check that the software intended to compile or run source code is correctly installed and that its operator understands how to use it.
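As an illustration, a minimal sketch of such a program in Python might look like the following. The function names `greeting` and `main` are chosen here for readability only and are not part of any convention; a one-line `print("Hello, World!")` would serve equally well.

```python
def greeting() -> str:
    """Return the traditional message (hypothetical helper, for illustration)."""
    return "Hello, World!"


def main() -> None:
    # Write the message to standard output; no user input is read.
    print(greeting())


if __name__ == "__main__":
    main()
```

Assuming the code is saved under a hypothetical file name such as `hello.py`, running it with a Python 3 interpreter and seeing the message appear is the kind of sanity check described above: it suggests that the interpreter is installed and that the operator knows how to invoke it.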

"Hello, World!" program handwritten in the C language and signed by Brian Kernighan (1978)

A "Hello, World!" program running on Sony's PlayStation Portable as a proof of concept

CNC machining test in Perspex

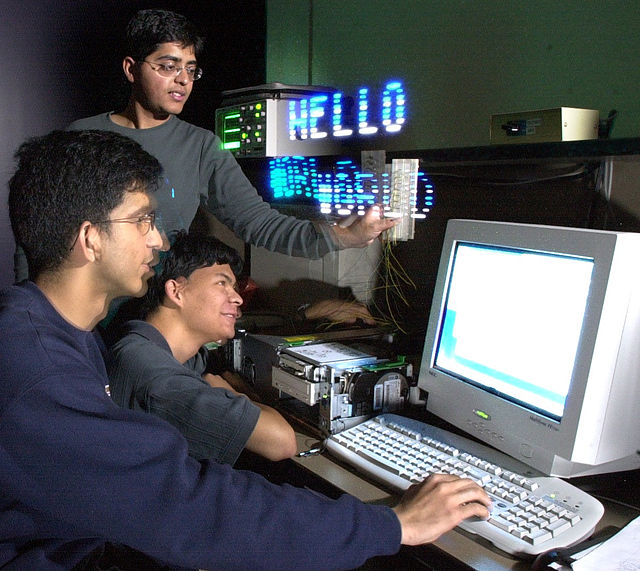

A "Hello, World!" message being displayed through long-exposure light painting with a moving strip of LEDs

A computer program is a sequence or set of instructions in a programming language for a computer to execute. It is one component of software, which also includes documentation and other intangible components.

Lovelace's description from Note G

Glenn A. Beck changing a tube in ENIAC

Switches for manual input on a Data General Nova 3, manufactured in the mid-1970s

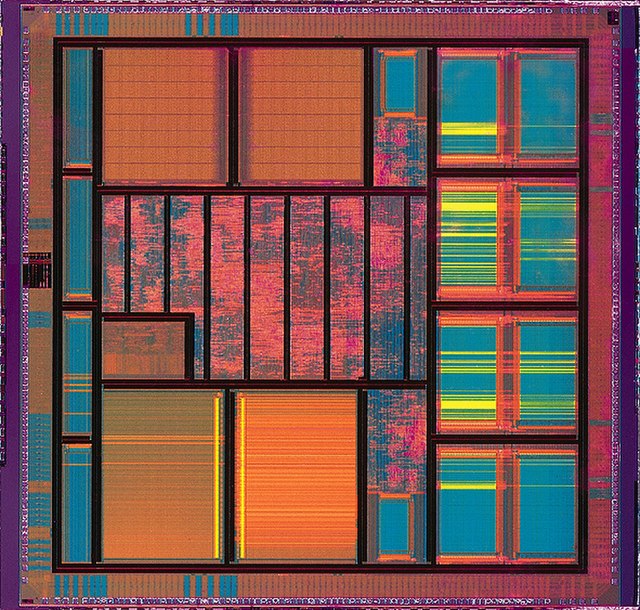

A VLSI integrated-circuit die