Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change, and information systems including the transmission of information in telecommunication.

Rudolf Clausius (1822–1888), originator of the concept of entropy

Thermodynamics is a branch of physics that deals with heat, work, and temperature, and their relation to energy, entropy, and the physical properties of matter and radiation. The behavior of these quantities is governed by the four laws of thermodynamics, which give a quantitative description in terms of measurable macroscopic physical quantities and which can be explained in terms of microscopic constituents by statistical mechanics. Thermodynamics applies to a wide variety of topics in science and engineering, especially physical chemistry, biochemistry, chemical engineering, and mechanical engineering, as well as to other complex fields such as meteorology.

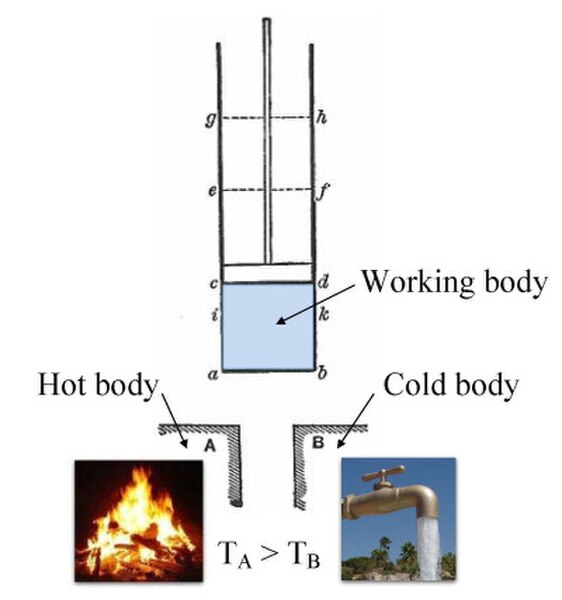

Annotated color version of the original 1824 Carnot heat engine showing the hot body (boiler), working body (system, steam), and cold body (water), with the letters labeled according to the stopping points in the Carnot cycle

Opening a bottle of sparkling wine (high-speed photography). The sudden drop in pressure causes a sharp drop in temperature of the escaping gas. The moisture in the surrounding air freezes, creating a fog of tiny ice crystals.
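
The cooling described in the sparkling wine caption can be estimated with a rough sketch. It assumes the escaping gas behaves as an ideal gas undergoing a reversible adiabatic expansion, for which T2 = T1 · (P2/P1)^((γ − 1)/γ). A real bottle opening is neither reversible nor perfectly adiabatic, so this gives only an idealized estimate. The function name and the input values (a bottle at about 20 °C and 6 atm, and γ ≈ 1.3 for carbon dioxide) are illustrative assumptions, not figures from the text.

```python
def adiabatic_final_temperature(t_initial_k, p_initial, p_final, gamma):
    """Final temperature (K) of an ideal gas after a reversible adiabatic
    pressure change, using T2 = T1 * (P2 / P1) ** ((gamma - 1) / gamma).

    p_initial and p_final may be in any unit, as long as it is the same one.
    """
    if t_initial_k <= 0 or p_initial <= 0 or p_final <= 0:
        raise ValueError("temperature and pressures must be positive")
    if gamma <= 1:
        raise ValueError("heat capacity ratio gamma must exceed 1")
    return t_initial_k * (p_final / p_initial) ** ((gamma - 1.0) / gamma)


# Illustrative inputs (assumptions, not measured data):
T_BOTTLE_K = 293.15   # gas in the bottle at roughly 20 degrees Celsius
P_BOTTLE_ATM = 6.0    # assumed pressure inside the bottle
P_AMBIENT_ATM = 1.0   # ambient pressure
GAMMA_CO2 = 1.3       # approximate heat capacity ratio of carbon dioxide

t_final_k = adiabatic_final_temperature(
    T_BOTTLE_K, P_BOTTLE_ATM, P_AMBIENT_ATM, GAMMA_CO2
)
print(f"Estimated gas temperature after expansion: {t_final_k:.1f} K "
      f"({t_final_k - 273.15:.1f} degrees Celsius)")
```

Under these assumptions the estimate falls well below the freezing point of water, which is consistent with moisture in the nearby air freezing into ice crystals.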