A floppy disk or floppy diskette is a type of disk storage composed of a thin, flexible disk of magnetic storage medium housed in a square or nearly square plastic enclosure lined with fabric that removes dust particles from the spinning disk. Floppy disks store digital data that can be read and written when the disk is inserted into a floppy disk drive (FDD) connected to or built into a computer or other device.

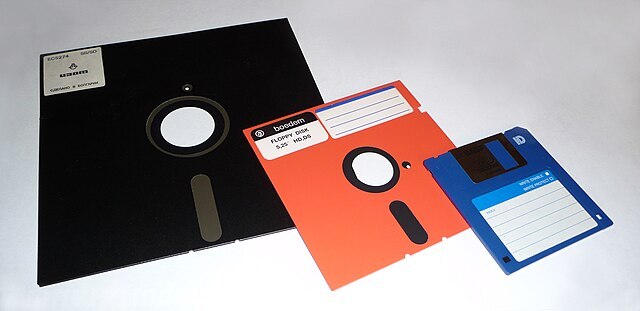

8-inch, 5¼-inch, and 3½-inch floppy disks

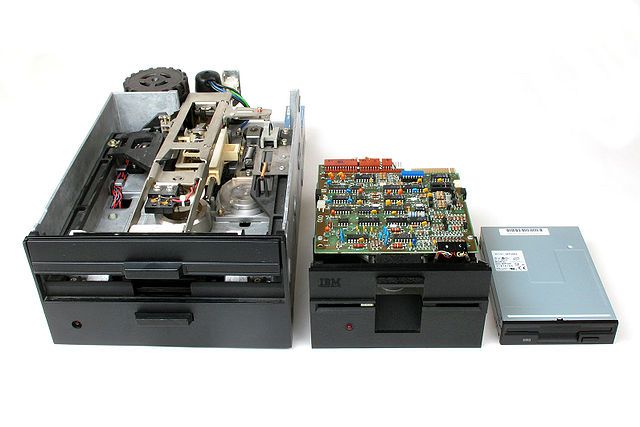

8-inch, 5¼-inch (full height), and 3½-inch drives

A 3½-inch floppy disk removed from its housing

8-inch floppy disk inserted in a drive (3½-inch floppy diskette in front, shown for scale)

Disk storage is a data storage mechanism based on a rotating disk. Data is recorded by making electronic, magnetic, optical, or mechanical changes to the disk's surface layer. A disk drive is a device that implements such a storage mechanism. Notable types are hard disk drives (HDD), which contain one or more non-removable rigid platters; the floppy disk drive (FDD) and its removable floppy disk; and various optical disc drives (ODD) and their associated optical disc media.

Six hard disk drives

Three floppy disk drives

A CD-ROM (optical) disc drive